Developing a Smart Walking Stick for the Visually Impaired

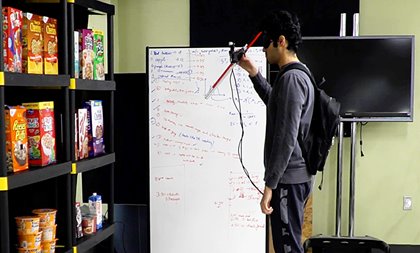

Camera mounted on cane handle helps visually impaired identify objects.

There is much research being done on helping the visually impaired better navigate their world. Sensors, haptics, cameras, and other technology are being fitted onto clothes, glasses, and walking sticks to help people better move around. An effort by researchers at the University of Colorado, Boulder, takes the work to a more nuanced level: identifying products on a shelf.

Bradley Hayes, assistant professor of computer science, and his Ph.D. student Shivendra Agrawal are working with the standard white-and-red cane used by visually impaired people, fitting it with a camera and computer vision. Using a limited database of common grocery store products, they’ve developed a system that identifies a product on a shelf, tells the user the distance to the shelf, and then gives spoken instructions for locating and picking up the item.

“There have been plenty of people innovating in the space,” Hayes said. “We spent quite a while talking about the pros and cons of existing solutions. We started thinking about the standard white cane. This is something useful even without the power in our sensors, so we can augment this.”

Together with members of the university’s Collaborative Artificial Intelligence and Robotics Lab, they fitted a cane with a camera and computer vision technology. For navigation, the cane guides users with vibrations in the handle and spoken directions.

“It is helpful to think of the cane as having two modes right now,” Hayes said. “One of them is actually walking around, getting from Point A to Point B. The second one is helping actually pick stuff off shelves or tables and doing manipulation guidance.”

The team mounted a depth camera close to the cane’s handle to give it perception, and placed vibration motors on the left and right sides of the thumb pad, where the user’s thumb rests. “We combine all of that with the speaker for audio feedback and we figured there is a vast amount of relevant tasks we could address,” Hayes said.

To test the device, the team first set up a makeshift café, with tables, seats, and patrons. For now, the device is powered by a computer carried in a backpack. Subjects were blindfolded before walking into the “café,” where they swiveled the walking stick around the room. The algorithms identified the room’s features and guided the user to an ideal seat in 10 out of 12 trials, and the system found at least one open chair in every trial.

Those results were presented last year at the International Conference on Intelligent Robots and Systems. Later work, yet to be published, tested the smart walking stick in a grocery store setting. Team members built mock grocery shelves and created a database of cereal and canned foods. Users were again blindfolded and lifted the stick to scan the shelves. The cane then identified a product and told the user to move right or left to pick it up.

“The cane provides two kinds of guidance but can also provide richer information using audio. So it issues key commands, like ‘Move a bit to your left, move six inches forward,’ and asking them to grab the object,” Agrawal noted. “We see this as a control mechanism for essentially [using] the cane to make the person move. We kind of envision this as an under-actuated robotic system.”
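
The “under-actuated” framing can be illustrated with a small sketch of how a target’s position might be turned into one cue at a time. This is not the team’s code. The article does not describe their control logic, and the `guidance_cue` function, the `Cue` type, the 5 cm tolerance, and the rule of correcting left/right before forward motion are all hypothetical. The sketch assumes the depth camera and product detector already report the target’s offset from the user’s hand in meters.

```python
from dataclasses import dataclass
from typing import Optional

METERS_PER_INCH = 0.0254


@dataclass
class Cue:
    speech: str             # phrase to hand to a text-to-speech engine
    vibrate: Optional[str]  # "left", "right", or None: which thumb-pad motor to pulse


def guidance_cue(lateral_m: float, forward_m: float,
                 tolerance_m: float = 0.05) -> Cue:
    """Hypothetical: map the target's offset from the hand to a single cue.

    lateral_m: target offset to the user's right (positive) or left (negative).
    forward_m: target offset ahead of (positive) or behind (negative) the hand.
    Offsets within tolerance_m are treated as already aligned.
    """
    # Assumed ordering: fix left/right alignment first, since the two
    # vibration motors can only signal a side.
    if abs(lateral_m) > tolerance_m:
        side = "right" if lateral_m > 0 else "left"
        return Cue(f"Move a bit to your {side}", side)

    # Then close the forward/backward gap, spoken in whole inches.
    if abs(forward_m) > tolerance_m:
        inches = round(abs(forward_m) / METERS_PER_INCH)
        unit = "inch" if inches == 1 else "inches"
        direction = "forward" if forward_m > 0 else "back"
        return Cue(f"Move {inches} {unit} {direction}", None)

    # Aligned on both axes: prompt the user to reach for the item.
    return Cue("Grab the object", None)


if __name__ == "__main__":
    print(guidance_cue(-0.10, 0.15).speech)
    print(guidance_cue(0.0, 0.1524).speech)
    print(guidance_cue(0.02, 0.03).speech)
```

Because the person, not a motor, does the moving, this sketch issues one small correction per call; in practice it would be re-run on each new camera frame until it returns the grab instruction.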

Hayes noted that the cane needs more work before it can be introduced to the visually impaired community. For one, the controls need to be miniaturized. One of the guiding principles of the work is that the device can still be used if the controls are lost.

As an example, he pointed to earlier work on a cane fitted with a wheel at its tip that pulled and guided the user. “We think that is a really cool design, but the design violated one of the requirements that we put on ourselves, which was that you should still be able to use the device for its productive purpose, even if the power is off,” Hayes said.

Hayes said the work reinforces the idea that the foundational technologies enabling autonomous field robots apply directly to augmenting existing tools. “Things like localization and navigation, avoiding obstacles, perception: All of these sort of core robotics concepts can [be] operationalized in one of these assistive devices,” he said.

The next steps in development have yet to be determined. The team is looking for more collaborators, as Agrawal is due to graduate within the next year.

“We’re going to be building more prototype models of this to hand out to those collaborators to do their own capability extensions,” Hayes said.

John Kosowatz is senior editor.