How to Teach Soft Robot Navigation

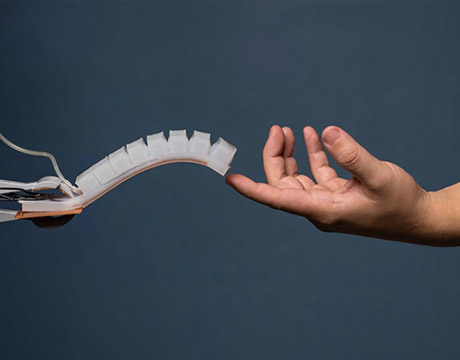

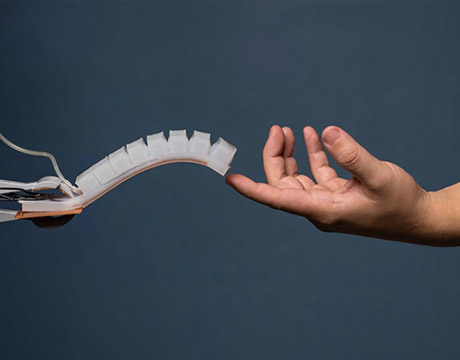

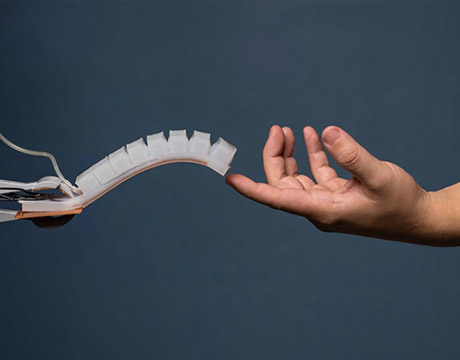

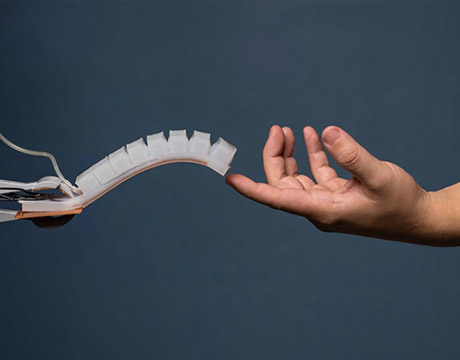

A soft robotic finger perceives using sensors and recurrent neural networks. Image: David Baillot / UC San Diego

Perception is a tricky thing. Humans use all their senses to learn about their location and the objects with which they interact. If one sense falters, say sight or hearing, we can still navigate our world. Now, a team of engineers at the University of California, San Diego, is seeking to create networks of sensors that would give robots the same redundancy without simply duplicating existing sensors.

This is especially important as robots become smarter and more mobile. Today, most robots are still rigid and designed for just a handful of tasks. They contain sensors, particularly at the joints, to monitor and track their movements. This produces predictable, reliable movements, but if one sensor fails, the whole system crashes.

Michael Tolley, a professor of mechanical and aerospace engineering who heads the Bioinspired Robotics and Design Lab at UC San Diego, wants to create a more redundant series of sensors by networking them together.

Equally important, he wants to do this in soft robots that can use their “senses” to explore new and unpredictable environments more safely than their rigid cousins.

“If a robot has to move around in the world, it’s not as simple as navigating a factory floor,” Tolley explained. “You want it to understand not just how it moves, but how other things are going to get around it.”

The team started with an under-utilized sense in the robotic arsenal: touch.

“There is a lot of very valuable information in the sense of touch,” Tolley said. “So a big part of my lab’s work involves designing soft robots that are inherently less dangerous [than rigid robots] and that can be put in places where you wouldn’t necessarily put a rigid manufacturing robot.”

Soft robots present a unique set of challenges, starting with something as simple as sensor placement. Tolley’s robot resembles a human finger made out of a rubber-like polymer. It does not offer much in the way of structure; there are no joints or other obvious places to mount sensors.

Tolley and his team saw this as a creative opportunity to look at perception in a different, almost playful, kind of way.

Instead of running simulations to find the best places for the robot’s four strain sensors, his team placed them randomly. They then applied air pressure to the finger and fed the response from the four sensors into a neural network to record and process the movements. A neural network is a type of machine learning system built from connected nodes; in this case, the sensors supply its inputs.

Researchers generally train neural networks by providing them with examples that they can match against their sensory input. To supply those examples, the UC San Diego engineers tracked the finger with a motion capture system during training, then removed the motion capture system once training was done. This helped the robot learn how to react to different strain signals from the sensors.
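The training step can be illustrated with a toy example. The sketch below is not the team's code: it uses synthetic data, a single bend angle as the stand-in for motion capture output, and a small feed-forward network rather than the recurrent network the researchers used. The sensor gains, noise level, network size, and all names are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in data: one bend angle (radians) per sample plays the
# role of the motion capture labels.
n_samples, n_sensors, n_hidden = 500, 4, 8
bend_angle = rng.uniform(0.0, 1.0, size=(n_samples, 1))

# Randomly placed sensors: each responds to bending with its own gain (assumed).
gains = rng.uniform(0.5, 1.5, size=(1, n_sensors))
strain = np.tanh(bend_angle * gains) + rng.normal(0.0, 0.02, size=(n_samples, n_sensors))

# Small feed-forward network: 4 strain inputs -> 8 tanh units -> 1 angle output.
W1 = rng.normal(0.0, 0.5, size=(n_sensors, n_hidden))
b1 = np.zeros(n_hidden)
W2 = rng.normal(0.0, 0.5, size=(n_hidden, 1))
b2 = np.zeros(1)

def forward(x):
    """Return the hidden activations and the predicted bend angle."""
    hidden = np.tanh(x @ W1 + b1)
    return hidden, hidden @ W2 + b2

def loss(x, y):
    """Mean squared error between predicted and labeled bend angle."""
    return float(np.mean((forward(x)[1] - y) ** 2))

initial_loss = loss(strain, bend_angle)

# Full-batch gradient descent on the mean squared error.
learning_rate = 0.05
for _ in range(3000):
    hidden, predicted = forward(strain)
    d_pred = 2.0 * (predicted - bend_angle) / n_samples
    d_hidden = (d_pred @ W2.T) * (1.0 - hidden ** 2)
    W2 -= learning_rate * (hidden.T @ d_pred)
    b2 -= learning_rate * d_pred.sum(axis=0)
    W1 -= learning_rate * (strain.T @ d_hidden)
    b1 -= learning_rate * d_hidden.sum(axis=0)

final_loss = loss(strain, bend_angle)
print(f"mean squared error before training: {initial_loss:.4f}")
print(f"mean squared error after training:  {final_loss:.4f}")
```

Once trained, the network needs only the four strain readings to estimate the bend angle, which is why the labeling system can be taken away afterward.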

This enabled the team to predict forces applied to the finger as well as simple movements. Ultimately, they would like to develop models that predict the complex combination of forces and deformations of soft robots as they move. This cannot be done today. Better models would enable engineers to optimize sensor design, placement, and fabrication for future soft robots.

In the meantime, the team has been learning how networked sensors behave.

Their most important finding was that the robot could still perform even after one of the four sensors stopped working. This is because the system does not depend on sensors in specific locations to monitor a particular function.

Instead, the neural network draws on information from all the sensors in its network to predict the finger’s movement. When a sensor fails, the network can still synthesize information, albeit with a little less accuracy.

This concept is called graceful degradation and is found throughout nature. For example, the bodies of aging humans become weaker and stiffer, but they can still walk, though perhaps more slowly than they once did.
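A toy calculation shows why redundant sensors tend to degrade gracefully. The sketch below is an illustration under stated assumptions, not the team's method. It swaps the neural network for a simple least-squares estimate, assumes each sensor responds linearly to a single bend angle, and assumes the system knows which sensor has failed. All names and numbers are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n_sensors, noise_std = 4, 0.02

# Hypothetical sensor model: strain_i = gain_i * bend_angle + offset_i + noise.
true_gains = rng.uniform(0.5, 1.5, size=n_sensors)
true_offsets = rng.uniform(-0.1, 0.1, size=n_sensors)

def read_sensors(bend_angle):
    """Simulate noisy strain readings for an array of bend angles."""
    noise = rng.normal(0.0, noise_std, size=(bend_angle.size, n_sensors))
    return bend_angle[:, None] * true_gains + true_offsets + noise

# Calibrate once: fit each sensor's gain and offset against ground-truth angles.
train_angle = rng.uniform(0.0, 1.0, size=500)
train_strain = read_sensors(train_angle)
fits = [np.polyfit(train_angle, train_strain[:, i], 1) for i in range(n_sensors)]
gains = np.array([f[0] for f in fits])
offsets = np.array([f[1] for f in fits])

def estimate_angle(strain, working):
    """Least-squares bend angle from whichever sensors are still working."""
    g = gains[working]
    return (strain[:, working] - offsets[working]) @ g / np.sum(g ** 2)

test_angle = rng.uniform(0.0, 1.0, size=2000)
test_strain = read_sensors(test_angle)

def rmse(working):
    """Root-mean-square error of the estimate on the test data."""
    error = estimate_angle(test_strain, working) - test_angle
    return float(np.sqrt(np.mean(error ** 2)))

all_working = np.ones(n_sensors, dtype=bool)
rmse_all = rmse(all_working)
print(f"all four sensors working: RMSE = {rmse_all:.4f} rad")

rmse_one_failed = []
for failed in range(n_sensors):
    working = all_working.copy()
    working[failed] = False          # ignore the failed sensor entirely
    rmse_one_failed.append(rmse(working))
    print(f"sensor {failed} failed:        RMSE = {rmse_one_failed[-1]:.4f} rad")
```

Because no single sensor is tied to a particular function, losing one removes a share of the information rather than a whole capability. In this toy model the estimate typically gets somewhat noisier but stays usable.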

Tolley’s team has many more challenges ahead. Their biggest obstacle is that their soft finger robot does not have a mechanical skeleton to push or lift things. Instead, they use a pneumatic system to pressurize it and generate mechanical force. In order to build larger models to carry out real tasks, they will need some kind of structure to transfer force.

With time, the team hopes to build out an entire system with hundreds of sensory components that feed into a neural network that uses touch plus vision and hearing to explore its environment.

“When we envision humans and robots working in the same place, that’s where I see a soft robot being useful,” Tolley said. “In the operating room, in search and rescue situations, and even in homes, helping people with disabilities get around.”

Commercialization is still many years off. But, for Tolley, recreating human perception is worth the hard work.

“In a way, we’re just trying to understand ourselves,” he said. “At some level, humans are really just very complicated machines.”

Cassie Kelly is an independent writer.