4 Ways Technology Moves Art

Wormlike tubes observe and react to human interaction in Petting Zoo. Image: Courtesy of Minimaforms.

Lasers, algorithms, robots, artificial intelligence, and machine learning are standard tools and technologies used by engineers and scientists. But they are not exclusive to them. Technology always filters out into the wider society, and artists are among the first to experiment with it and embrace its possibilities.

In the 19th century, the Impressionists exploited a new invention, tin tubes of paint, which allowed them to move outdoors and expand their color palette without worrying about paint drying out before it could be used or bursting from the pig bladders commonly used to store it. In the 20th century, Andy Warhol took the simple silkscreen and brought silkscreen painting into the vernacular. There are many other examples.

Now, in the 21st century, artists are using lasers and facial recognition technology to create interactive light displays, virtual reality for immersive experiences, and even artificial intelligence and algorithms to create computer-generated art…if it can be called art. But that is another discussion.

In a 2015 essay, the late David Featherstone, a professor of biology and neuroscience at the University of Illinois at Chicago, wrote, “Both science and art are human attempts to understand and describe the world around us. The subjects and methods have different traditions, and the intended audiences are different, but … the motivations and goals are fundamentally the same. Both artists and scientists strive to see the world in new ways and to communicate that vision. When they are successful, the rest of us suddenly ‘see’ the world differently. Our ‘truth’ is fundamentally changed.”

Here are four examples of technologies that artists are embracing as the century moves into its third decade.

Motion Sensing and Data Scanning

In an exhibit called Petting Zoo, camera tracking and data scanning systems allow wormlike, tubular structures suspended from the ceiling to react to people entering their space. Produced by the design group Minimaforms for a 2015 digital show in London, Petting Zoo is an immersive experience in which the tubes change shape and color, and retract or expand, based on input from people. The more people there are in the exhibit, the more the tubes change and react. Minimaforms calls it “a synthetic robotic environment of animalistic creatures.”

The studio is the work of Theodore Spyropoulos, an architect and educator, and his brother Stephen, an artist, interactive designer, vice president of product design at Compass, and faculty member at Rutgers University. They founded Minimaforms in 2002 to explore how architecture and design can enable new forms of communication. Their interdisciplinary work uses digital design and fabrication to construct spaces of social and material interaction.

The “pets” were designed to learn from and respond to their environment rather than simply react to it. Each tube is fitted with a Kinect camera to locate and observe people. Using blob detection, each tube can process and interpret people's positions and actions, and thus map and respond to its surroundings. Through a basic system of machine learning that mimics memory, each tube develops behaviors that can change based on context. The longer a pet is active, the more advanced its movements and behaviors can become.
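Blob detection itself is simple enough to sketch. The Python below is a minimal, hypothetical illustration, not Minimaforms' code: it assumes a depth frame arrives as a grid of distances in millimeters (with 0 meaning no reading), treats anything closer than a threshold as a person, and groups touching pixels into blobs whose centroids a tube could turn toward. The function name `find_blobs` and both thresholds are illustrative assumptions.

```python
from collections import deque
from typing import List, Tuple

# (centroid_row, centroid_col, pixel_count); a hypothetical blob record
Blob = Tuple[float, float, int]


def find_blobs(depth: List[List[int]], max_depth_mm: int, min_pixels: int) -> List[Blob]:
    """Group 4-connected pixels closer than max_depth_mm into blobs (assumed frame format)."""
    rows = len(depth)
    cols = len(depth[0]) if depth else 0
    seen = [[False] * cols for _ in range(rows)]
    blobs: List[Blob] = []

    def is_foreground(r: int, c: int) -> bool:
        # 0 is treated as "no reading"; anything nearer than the threshold counts as a person.
        return 0 < depth[r][c] < max_depth_mm

    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or not is_foreground(r, c):
                continue
            # Breadth-first flood fill collects every pixel connected to (r, c).
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and not seen[ny][nx] and is_foreground(ny, nx):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Small specks are discarded as sensor noise.
            if len(pixels) >= min_pixels:
                cy = sum(p[0] for p in pixels) / len(pixels)
                cx = sum(p[1] for p in pixels) / len(pixels)
                blobs.append((cy, cx, len(pixels)))
    return blobs


if __name__ == "__main__":
    frame = [
        [0, 0, 0, 0, 0, 0],
        [0, 1200, 1250, 0, 0, 0],
        [0, 1210, 1230, 0, 0, 0],
        [0, 0, 0, 0, 2900, 0],  # too far away: background
        [0, 0, 0, 0, 1100, 1120],
    ]
    print(find_blobs(frame, max_depth_mm=2000, min_pixels=2))
    # prints [(1.5, 1.5, 4), (4.0, 4.5, 2)]
```

A blob's centroid gives a position that can be tracked from frame to frame; the memory-like learning described above would sit on top of that and is not modeled here.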

Lasers

Umbrellium is a London-based art cooperative that uses a variety of technologies to create participatory art. It has created large outdoor works and exhibits using light and lasers. In a work titled Assemblage, it uses motion tracking, laser, audio, and tactile output systems to produce three-dimensional light structures indoors. In this interactive piece, the structures are sculpted by people's movements and gestures, and they become more stable when people work as a group rather than individually.

Computer-controlled lasers produce three-dimensional light forms in the air that people manipulate with their bodies, hands, and feet. Motion-detecting sensors on the ceiling let people sculpt or destroy the forms with gentle movements or sweeping gestures. The light structures are thinner and more fragile when only one person is working, but they grow stronger when people hold hands and work as a group. The aim is to encourage trust and collaboration, since people must cooperate to build a stronger structure.
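The group effect can be expressed as a toy rule. The sketch below is hypothetical and does not describe Umbrellium's actual system: it assumes the sensors can report which participants are holding hands, uses a union-find structure to group connected people, and maps group size to an arbitrary structure strength. The names `group_sizes` and `structure_strength`, and the linear-with-a-cap rule, are all assumptions made for illustration.

```python
from typing import Dict, Iterable, List, Tuple


def group_sizes(people: Iterable[str], hand_holds: Iterable[Tuple[str, str]]) -> Dict[str, int]:
    """Return, for each person, the size of the hand-holding group they belong to."""
    parent = {p: p for p in people}

    def find(p: str) -> str:
        # Path halving keeps the union-find trees shallow.
        while parent[p] != p:
            parent[p] = parent[parent[p]]
            p = parent[p]
        return p

    # Every pair holding hands merges their two groups.
    for a, b in hand_holds:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[ra] = rb

    # Count members under each group's root, then report each person's group size.
    counts: Dict[str, int] = {}
    members: List[str] = list(parent)
    for p in members:
        root = find(p)
        counts[root] = counts.get(root, 0) + 1
    return {p: counts[find(p)] for p in members}


def structure_strength(group_size: int, base: float = 1.0, max_strength: float = 5.0) -> float:
    """Hypothetical rule: strength grows linearly with group size, up to a cap."""
    return min(base * group_size, max_strength)


if __name__ == "__main__":
    sizes = group_sizes(["ana", "ben", "cho", "dev"], [("ana", "ben"), ("ben", "cho")])
    for person, size in sizes.items():
        print(person, size, structure_strength(size))
```

Here "dev", standing alone, gets the thinnest structure, while the three people holding hands share a stronger one.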

Robots

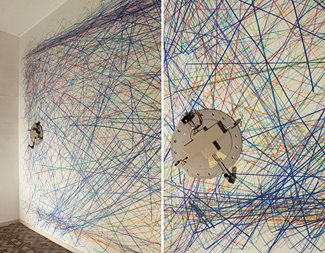

Robots are becoming ubiquitous in manufacturing and industry, and they are making their way into art. Two German artists, Michael Haas and Julian Adenauer, developed Vertwalker, a Roomba-like machine that climbs vertical surfaces and paints under the control of a software program, continually painting over its own earlier work. They built the robot from 3D-printed parts. Its underside holds a vacuum along with computer fans and two wheels, and it runs on an Arduino-based electronics platform.

Vertwalker can be programmed in different ways. In two separate shows, one robot moved vertically, veering left or right to make curves; the other was programmed to move toward sound. Both laid down lines of paint with a pen, forming abstract drawings. According to the artists, the colors are inspired by the robot's location. The bot has used up to nine colors, programmed by the day. It can work for up to three hours before its battery needs to be replaced.
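Moving toward sound can be done with differential steering. The function below is a hypothetical Python sketch of that idea, not the artists' code (the real robot runs on an Arduino-based platform): it assumes two microphones report loudness on the left and right, and speeds up the wheel on the quieter side so the robot turns toward the louder one, in the spirit of a Braitenberg vehicle. `wheel_speeds`, `base_speed`, and `gain` are illustrative names.

```python
from typing import Tuple


def wheel_speeds(left_level: float, right_level: float,
                 base_speed: float = 0.5, gain: float = 0.5) -> Tuple[float, float]:
    """Return (left, right) wheel speeds that steer toward the louder microphone."""
    total = left_level + right_level
    if total == 0:
        # Silence: keep driving straight.
        return base_speed, base_speed
    # balance is in [-1, 1]; positive means the sound is stronger on the right.
    balance = (right_level - left_level) / total
    # A faster left wheel turns the robot right, toward the sound, and vice versa.
    left = base_speed * (1 + gain * balance)
    right = base_speed * (1 - gain * balance)
    return left, right


if __name__ == "__main__":
    print(wheel_speeds(0.0, 1.0))  # prints (0.75, 0.25): veer right
    print(wheel_speeds(1.0, 1.0))  # prints (0.5, 0.5): straight ahead
```

Because the correction is proportional to the imbalance, the robot curves gently when the source is slightly off-center and turns harder when it is far to one side, which would naturally leave curved paint trails.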

Algorithms

Machine learning is progressing to the point where algorithms themselves are creating art. In 2018, a portrait by the French art collective Obvious fetched $432,500 at auction. Christie’s called it the first portrait generated by an algorithm to come up for auction and had estimated its value before the sale at $7,000 to $10,000.

Christie’s described the portrait as “not the product of a human mind” but of an algorithm “defined by an algebraic formula,” setting it apart from other works of art. To create it, the artists of Obvious fed their algorithm thousands of portraits to teach it form and overall aesthetics. The algorithm then produced the portrait of a blurred face.

But some artists have been writing code to generate art for half a century or so. In 1973, Harold Cohen wrote AARON, an early artificial intelligence program that defined rules and forms from which a computer produced drawings. Cohen developed AARON over the years, but it never departed from the principle that the artist directed the tasks. That premise is now shifting toward a computer that analyzes thousands of images to learn an aesthetic and then produces an image from that experience.
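The artist-directed approach is easiest to see in code. The sketch below is a hypothetical rule-based drawing generator in the spirit of AARON, far simpler than Cohen's program and not derived from it: the artist fixes the rules (how sharply a line may turn, what it does at the canvas edge), and the computer carries them out, writing the result as an SVG polyline. All names and parameter values are assumptions.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float]


def rule_based_path(seed: int, steps: int = 40, size: float = 200.0,
                    step_len: float = 12.0, max_turn_deg: float = 35.0) -> List[Point]:
    """Generate a wandering line whose shape is constrained by artist-set rules."""
    rng = random.Random(seed)  # seeded, so the same rules and seed give the same drawing
    x, y = size / 2, size / 2
    heading = rng.uniform(0, 2 * math.pi)
    points = [(x, y)]
    for _ in range(steps):
        # Rule 1: turn by at most max_turn_deg per step, so curves stay smooth.
        heading += math.radians(rng.uniform(-max_turn_deg, max_turn_deg))
        nx = x + step_len * math.cos(heading)
        ny = y + step_len * math.sin(heading)
        # Rule 2: a stroke that would leave the canvas stops at the edge and turns around.
        if not (0 <= nx <= size and 0 <= ny <= size):
            heading += math.pi
            nx = min(max(nx, 0.0), size)
            ny = min(max(ny, 0.0), size)
        x, y = nx, ny
        points.append((x, y))
    return points


def to_svg(points: List[Point], size: float = 200.0) -> str:
    """Render the path as a single black SVG polyline."""
    coords = " ".join(f"{px:.1f},{py:.1f}" for px, py in points)
    return (f'<svg xmlns="http://www.w3.org/2000/svg" width="{size:g}" height="{size:g}">'
            f'<polyline points="{coords}" fill="none" stroke="black"/></svg>')


if __name__ == "__main__":
    print(to_svg(rule_based_path(seed=7)))
```

Every line in the output follows from rules the programmer wrote; a learning system like the one Obvious used instead infers its tendencies from example images.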

John Kosowatz is senior editor.
