Social Insects Inspire Design of Autonomous Robots

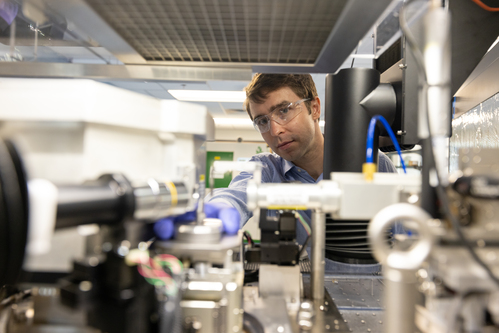

Individual ants have simple behaviors but perform complex tasks by working together.

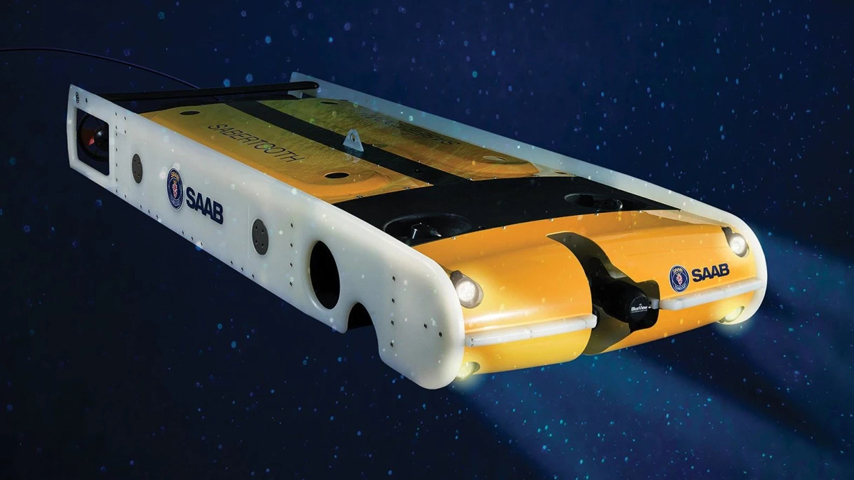

Robots have overrun Hanover Fair, the world’s largest industrial exposition, held annually in Germany. Exhibitors display hundreds of robotic arms, actuators, sensor systems, and feedback mechanisms.

The growing presence of autonomous robots at Hanover reflects worldwide interest in robots that can clean house, fight wars, or serve as personal assistants—all by themselves.

With autonomous robots, developers must wrestle with the most vexing of control questions: How can a robot be made intelligent enough to operate without direct guidance? That goal is very different from programming an automated control system in a factory, where every piece of equipment and every possible interaction is understood ahead of time.

A truly autonomous robot must be able to interact with a constantly changing—and therefore, unknown—environment.

Learning from Ants

Once, theorists thought autonomous robots needed artificial intelligence—the ability to assess the environment and make informed judgments before acting. Today, developers feel that type of “thinking” is far too slow for real-time decision making. Instead, engineers are looking for clues in the behavior of social insects.

“When simple behaviors work together, they can create what appear to be complex, problem-solving behaviors,” noted Bryan Adams. Adams is a principal investigator at iRobot in Bedford, MA, which developed Roomba, the world’s most widely owned commercial robot—and one inspired by the behavior of ants.

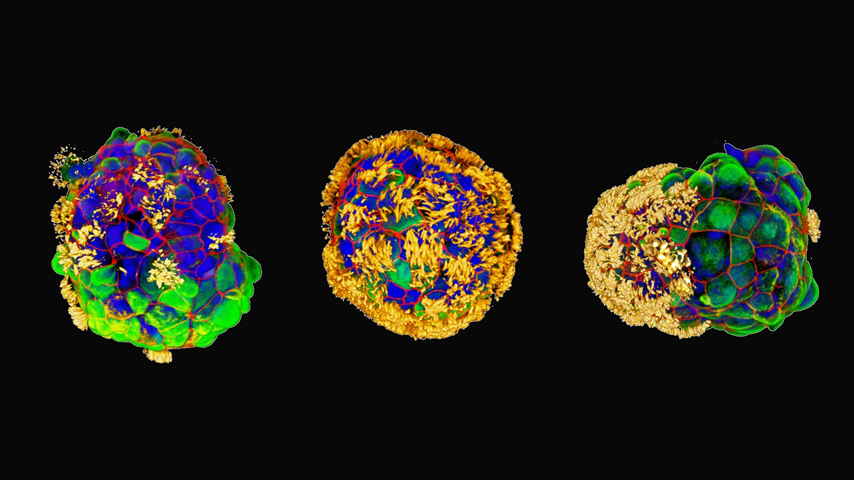

Consider how some species of ants find the shortest route to food. When a randomly foraging ant finds food, it grabs a piece and wanders back to the nest, leaving behind a trail of pheromones.

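The ants that find the most direct route to the food and back leave the strongest scent, because they complete more round trips in the same time and so refresh the trail more often. The strongest trail is the easiest to follow, so more ants take it and reinforce it further.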

At the highest level, this looks like rational—even intelligent—behavior. Yet it derives from rudimentary instincts: walk randomly until you find food, bring the food back to the nest, follow the strongest scent back to food, and repeat.
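How such rudimentary rules can favor the shorter route is easy to try out in a few lines of Python. The sketch below is a toy model, not a description of real ant biology or of any robot: the function name `simulate_trails`, the two route lengths, the evaporation rate, and the rule that each trip deposits scent in proportion to 1/length are all assumptions made for illustration.

```python
import random

def simulate_trails(lengths, n_ants=20, n_steps=200, evaporation=0.1, seed=0):
    """Toy model of ants choosing between routes by scent strength.

    lengths maps a route name to its length (arbitrary units).
    Assumptions of this sketch: scent fades by a fixed fraction each
    step, and a trip deposits 1/length of scent, standing in for the
    fact that short routes are completed more often.
    """
    rng = random.Random(seed)
    names = list(lengths)
    pheromone = {name: 1.0 for name in names}  # every route starts equal
    for _ in range(n_steps):
        # Each ant follows a route with probability proportional to its scent.
        weights = [pheromone[name] for name in names]
        choices = rng.choices(names, weights=weights, k=n_ants)
        # Old scent fades a little.
        for name in names:
            pheromone[name] *= 1.0 - evaporation
        # Ants that just walked a route lay fresh scent on it.
        for name in choices:
            pheromone[name] += 1.0 / lengths[name]
    return pheromone

trails = simulate_trails({"short": 2.0, "long": 5.0})
```

No ant in this model compares the two routes. The preference for the short one comes only from the feedback between following scent and laying it down.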

Such an approach guides Roomba, a disk on wheels that scurries around furniture and backs away from walls while randomly vacuuming rooms. Roomba’s instruction set looks something like: wander and vacuum, go left or right upon hitting an object, back up or spiral when caught in a corner, and find the docking station to recharge when low on power.
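A rule list of this kind can be written as a short chain of checks. The following is a hypothetical sketch, not iRobot's software: the `Sensors` fields, the action names, and especially the order in which the rules are tested are assumptions chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class Sensors:
    """Hypothetical sensor readings for one control cycle."""
    battery_low: bool = False
    in_corner: bool = False
    bumped: bool = False

def choose_action(sensors: Sensors) -> str:
    """Return the first behavior whose trigger is active.

    The priority order (recharge, then escape a corner, then turn away
    from an obstacle, then wander) is an assumption of this sketch.
    """
    if sensors.battery_low:
        return "find_dock_and_recharge"
    if sensors.in_corner:
        return "back_up_or_spiral"
    if sensors.bumped:
        return "turn_left_or_right"
    return "wander_and_vacuum"

action = choose_action(Sensors(bumped=True))
```

Each rule looks only at the current sensor readings, so the robot needs no map of the room to keep moving.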

Learning Smart Behavior

Can robots actually learn behaviors with real intelligence? And can they do it with simple commands that enable them to function in the real world?

Stefan Wrobel thinks so. Wrobel, director of the Fraunhofer Institute for Intelligent Analysis and Information Systems near Bonn, Germany, is active in RoboCup competitions—soccer matches played by robots. “In robotics,” Wrobel explained while scores of students put the finishing touches on their RoboCup contenders, “behaviors are just the simplest building blocks. Robots that adapt to the environment go beyond manifesting behaviors: they learn while performing tasks.”

At the least complicated level, a robot could use simple behaviors to confirm its model of the environment. “When searching for power, if there is no power source in the northwest corner, it could remember that,” Wrobel said.

On a more sophisticated level, a robot could assign priorities to its own behaviors, such as when to move or what to grab. According to Wrobel, designers give robots goals, and when the robot does something right, it receives a numerical credit as a reward.
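One simple way to turn numerical credits into priorities is to keep a running score per behavior and prefer the behavior with the highest score. The sketch below assumes that scheme. It is not Wrobel's method, and the class name `BehaviorPriorities`, the learning rate, and the exploration rate are hypothetical.

```python
import random

class BehaviorPriorities:
    """Keeps a running score for each behavior, updated from credits."""

    def __init__(self, behaviors, learning_rate=0.1, explore=0.1, seed=0):
        self.value = {name: 0.0 for name in behaviors}
        self.learning_rate = learning_rate  # how fast scores follow new credits
        self.explore = explore              # chance of trying a random behavior
        self.rng = random.Random(seed)

    def choose(self):
        """Usually pick the best-scoring behavior, sometimes a random one."""
        if self.rng.random() < self.explore:
            return self.rng.choice(list(self.value))
        return max(self.value, key=self.value.get)

    def reward(self, behavior, credit):
        """Move the behavior's score a small step toward the credit received."""
        self.value[behavior] += self.learning_rate * (credit - self.value[behavior])

priorities = BehaviorPriorities(["move", "grab"])
priorities.reward("grab", 1.0)  # the designer's goal judged this grab a success
```

Behaviors that keep earning credit rise in the ranking, and the occasional random choice gives neglected behaviors a chance to prove themselves.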

Teamwork

Hanover’s RoboCup matches have a long-term goal: to develop a team of robots capable of winning a soccer match against human opponents by 2050. For now, humanoid robots are limited: they must stop before kicking the ball, they cannot pass effectively, few can lift themselves off the ground, and they are painfully slow.

Wheeled robots, however, speed around the field, bumping the ball to one another and then finally bouncing it towards the goal. The robots can work together and still make rapid decisions because they are organized hierarchically.

“Robots have to react to different things as individual robots and as team players,” said Sven Behnke, head of Bonn’s Autonomous Intelligent Systems Group.

Each of Behnke’s robots has four levels of control: the entire team, an individual robot, isolated body parts, and single joints. At the team level, the robots are focused on plans for the immediate future, while at the individual level, they react more to their environment.
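A layered controller of this general shape might pass decisions down from team to joint as in the sketch below. Only the four level names come from the description above. Every function, role, action, threshold, and joint angle here is invented for illustration and does not describe Behnke's software.

```python
# Hypothetical four-level controller; all names and numbers are illustrative.

def team_level(ball_x, robot_x):
    """Team: plan ahead by sending the robot nearest the ball to attack."""
    attacker = min(robot_x, key=lambda name: abs(robot_x[name] - ball_x))
    return {name: "attack" if name == attacker else "defend" for name in robot_x}

def robot_level(role, ball_distance):
    """Individual robot: react to current surroundings within its role."""
    if role != "attack":
        return "hold_position"
    return "kick" if ball_distance < 0.2 else "approach_ball"

# Body-part level: which limb does what for each action.
LIMB_COMMANDS = {
    "kick": {"right_leg": "swing"},
    "approach_ball": {"left_leg": "step", "right_leg": "step"},
    "hold_position": {},
}

# Joint level: made-up target angles in degrees for each limb command.
JOINT_TARGETS = {
    "swing": {"hip": 30.0, "knee": 10.0},
    "step": {"hip": 15.0, "knee": 25.0},
}

def control_step(ball_x, robot_x):
    """Run one pass from the team plan down to joint targets."""
    roles = team_level(ball_x, robot_x)
    joints = {}
    for name, role in roles.items():
        action = robot_level(role, abs(robot_x[name] - ball_x))
        limbs = LIMB_COMMANDS[action]
        joints[name] = {limb: JOINT_TARGETS[cmd] for limb, cmd in limbs.items()}
    return roles, joints

roles, joints = control_step(ball_x=1.0, robot_x={"r1": 0.9, "r2": 3.0})
```

Each level answers a narrower question than the one above it, which is one way a robot can stay coordinated with its team while still deciding quickly.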

Simple and reactive are good, but consistent is even better. That begins with physical robustness. For every minute of RoboCup play, there are untold hours of preparation and recalibration. At tables around the playing field, scores of students are repairing, upgrading, or salvaging robots.

“Winning boils down to consistency,” said Ericson Mar, who teaches robotics at Cooper Union in New York City. “The robots that win are the ones that can do the task over and over again.”

Now that engineers have developed robots to the level of ants, can they lift robotic sophistication to the next evolutionary step? Many challenges lie ahead.

[Adapted from “From Simple Rules, Complex Behavior” by Alan S. Brown, Associate Editor, Mechanical Engineering, July 2009.]