This Robot Learns by Watching

Researchers at Carnegie Mellon have created an algorithm that lets robots learn by just watching.

Right now, only serious robots are really helpful to humans: the kind that need hours of programming and perform repetitive tasks on the assembly line. We have yet to create true robot servants to make our coffee, take out our garbage, massage our feet. The reason is simple: they’re just too hard to teach.

Now, a group of researchers at Carnegie Mellon University has created an algorithm that lets robots learn by just watching. After just a single human-performed demonstration of, say, pulling out a drawer, a robot equipped with the algorithm will make an attempt at reproducing the action. And if it fails, it learns from its mistakes, repeatedly trying until it gets it right.

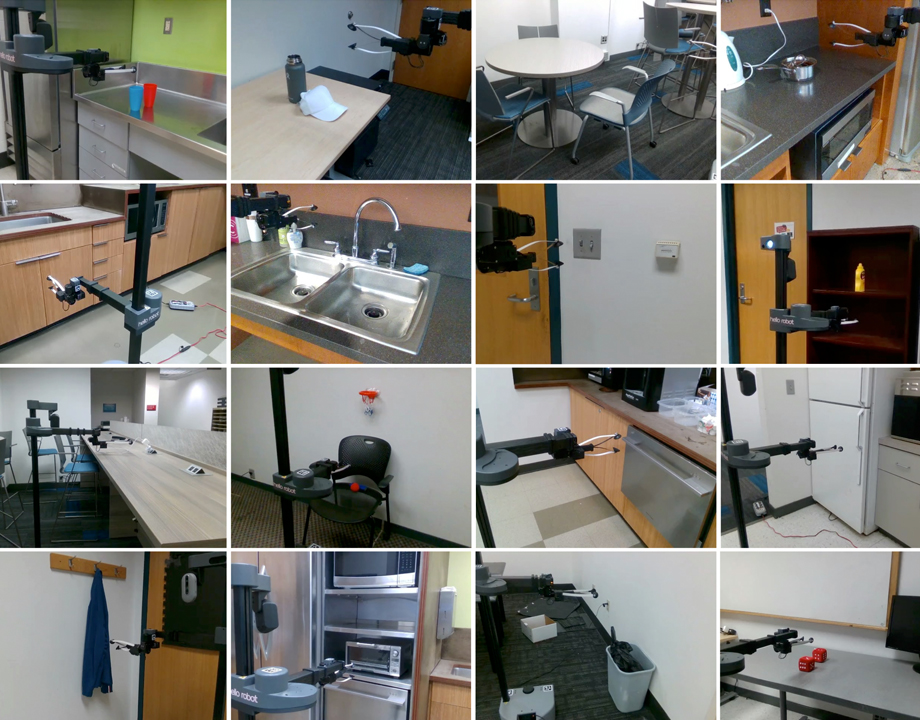

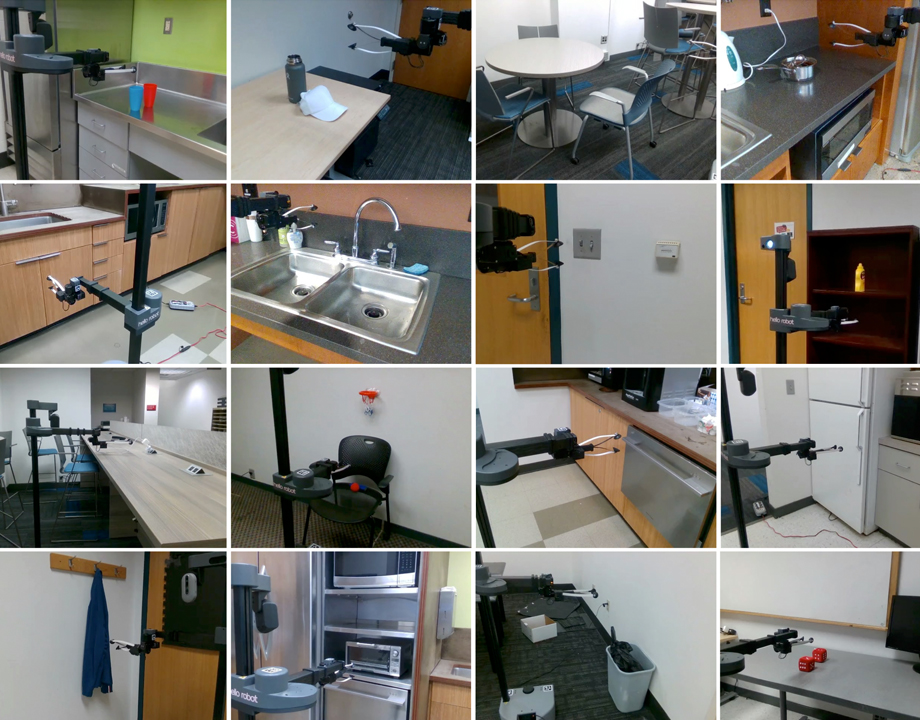

What’s more, it can perform these imitative operations in real kitchens and offices, not just in the sterile environment of a lab.

“When it comes to getting robots to do different tasks, we’re always focused on quasi-static, simple, tabletop setups in very controlled settings,” said Shikhar Bahl, a Ph.D. student spearheading the research at the university’s Robotics Institute. “I’m interested in building algorithms for robots to interact in different types of environments and different types of homes—in the wild, basically.”

Roboticists hoping to create autonomous machines that can work in the real world, with all its complexities and irregularities, are often stymied by a kind of chicken-and-egg problem: to get a robot to operate autonomously, you need a lot of data, but to collect a lot of data, a robot needs a certain amount of autonomy. Bahl sidestepped the issue by looking at another way of learning. “We were inspired by how children learn,” he said. “They watch everything around them.”

Similarly, Bahl’s robot sees the state of a room before human manipulation and compares it to what it sees after human manipulation. No other goal is input in any other way. It then imitates the human behavior to achieve the end result.

The robot is programmed to pay careful attention to the hands and arms of the person showing it what to do before trying the same movements with its own appendages. If, after mimicking what it saw, the result is not the same, it tries again until it achieves the intended goal.

“There’s very little chance it will be successful after just observing,” said Bahl. “Once it knows approximately what the human is doing it builds an exploration scheme around it. We want the robot to autonomously get better and better at the task.”
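
The watch, imitate, and retry cycle Bahl describes can be pictured with a toy sketch. This is not WHIRL's actual code: the one-dimensional "drawer," the `run_robot` stand-in with its 70 percent slip factor, the function names, and every number are assumptions made up for illustration. It only shows the general idea of taking the state seen after the human demonstration as the goal, replaying the demonstrated motion, and then sampling small variations around it while keeping whichever attempt lands closest to the goal.

```python
import random


def run_robot(trajectory):
    """Hypothetical stand-in for a real robot: the gripper slips, so only
    70% of each commanded pull actually moves the drawer."""
    return sum(0.7 * step for step in trajectory)


def improve_by_exploration(demo, goal_state, rounds=50, samples=20,
                           noise=0.05, seed=0):
    """Start from the demonstrated motion and keep any random variation
    that brings the outcome closer to the observed goal state."""
    rng = random.Random(seed)
    best = list(demo)
    best_error = abs(run_robot(best) - goal_state)
    for _ in range(rounds):
        for _ in range(samples):
            # Explore near the best attempt so far, not at random.
            candidate = [step + rng.gauss(0.0, noise) for step in best]
            error = abs(run_robot(candidate) - goal_state)
            if error < best_error:
                best, best_error = candidate, error
    return best, best_error


# Made-up demonstration: the human hand pulls 0.1 m in each of three steps.
human_demo = [0.1, 0.1, 0.1]
# The goal is simply the state seen after the human finished (drawer 0.3 m open).
goal = sum(human_demo)

first_try_error = abs(run_robot(human_demo) - goal)
learned, final_error = improve_by_exploration(human_demo, goal)
print("error when copying the human directly:", first_try_error)
print("error after exploring around the demo:", final_error)
```

In this sketch, copying the human directly falls short because the robot's body behaves differently from a human arm, which echoes Bahl's point that success right after observing is unlikely. The search stays close to the demonstration rather than trying arbitrary motions, so the single human example does most of the work.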

The method, called WHIRL (In-the-Wild Human Imitating Robot Learning), has allowed a co-bot to first watch and then learn how to open doors and drawers and close them halfway, fold shirts, clean whiteboards, turn on faucets, put a cup inside another cup, and—yes—take out the garbage, among many other tricks.

At the moment, a WHIRL-controlled robot has some limitations. For one thing, it can’t judge by sight alone whether an object might be too heavy for its gripper. It also is not skilled enough to imitate meticulous, detailed, or painstaking tasks like knitting or whisking an egg. That is no fault of the robot’s hardware; it just reflects the current abilities of computer vision systems. As they get better, so will WHIRL.

Bahl is also working to advance WHIRL so that it learns not just from live demonstrations, but by searching YouTube videos as well. It could then have a near-infinite range of abilities.

Such an adept imitator might have a use in manufacturing as well. “Maybe not on an assembly line, where you need very organized and very controlled programming,” said Bahl. “But maybe where people are hand designing different aspects of a product. I’ve hand-held many robots to tell them what to do. It’s useful, but it’s very, very time consuming.”

As a helper in the home, WHIRL is not the only aspiring assistant. Google’s Everyday Robots is also an attempt to create a personal robotic aid for everyday tasks. Its approach, though, is language-based: a requested task is matched to one already in its database, such as cleaning up a spill.

“These ideas could be nicely merged,” said Bahl. “You could have a high-level language model that tells the robot what to do, and it searches on YouTube to learn low-level skills.”

Bahl also hopes to create a way for humans to correct a robot if it’s not doing things right. He wants to add other inputs like touch, language and audio. And he wants WHIRL to learn for the long term.

“If I do something in one kitchen, can I do it in another kitchen?” he wonders. “We want it to be able to operate in any different scene.”

Michael Abrams is a science and technology writer in Westfield, N.J.
