Smarter Drone Navigation

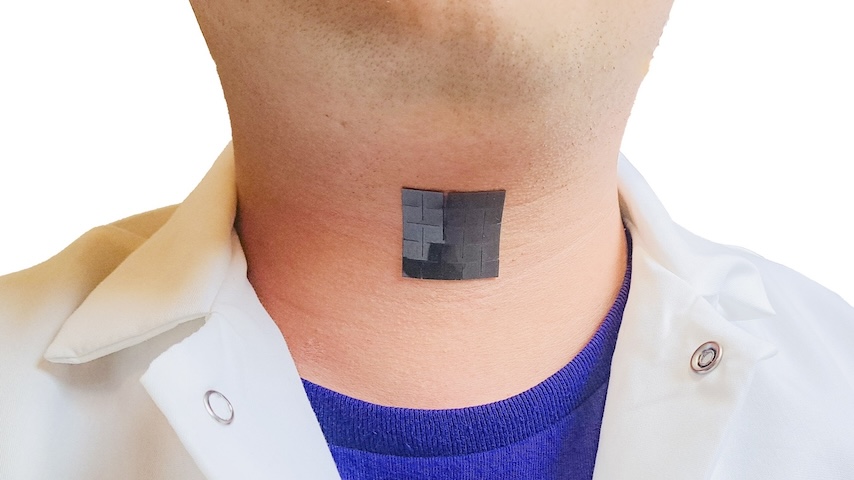

By using QR codes as landmarks, the ROUNDS navigational software allows drones to operate inside manufacturing facilities safely. Photo: Idaho National Laboratory

In 2008, automakers unveiled the first cars that could automatically detect and avoid a nose-to-tail crash without any intervention from the driver.

Since then, self-driving car technology has advanced significantly. In some cities, fully autonomous vehicles are literally right around the corner: the world’s first self-driving taxi service is operating in a suburb of Phoenix.

Likewise, aerial drone technology has advanced to the point that drones can now, or soon will, deliver packages, collect measurements, and perform other jobs with minimal human guidance.

Until now, drones have relied primarily on the Global Positioning System (GPS) to navigate. Self-driving cars, by contrast, combine GPS with lidar, radar, and cameras, all managed by sophisticated computer systems.

These systems include machine learning algorithms that allow the vehicle to recognize objects on the road so that the car can predict the behavior of those objects and react accordingly. This ability to recognize objects is crucial. Only by knowing that a child kicked a ball toward the road can a self-driving car make an appropriate decision about avoiding the ball and stopping for the child.

Idaho National Laboratory (INL) researchers are using a similar but more targeted version of this image recognition technology to solve a comparably tough problem: how an off-the-shelf, camera-equipped drone can accurately find its way through tight industrial spaces where GPS is unavailable.

INL researchers developed the Route Operable Unmanned Navigation of Drones (ROUNDS) to help a wide range of industries save time, reduce cost, and increase efficiency while reducing human exposure to heights, chemicals, radiation, and other hazards.

With ROUNDS, drones could patrol alongside human security guards, check inventory in a warehouse, or read hard-to-reach gauges in a factory or power plant.

Following Breadcrumbs Through the Forest

ROUNDS uses the drone’s camera to identify visual cues that are placed into the environment at known locations, like following breadcrumbs through a forest. At INL, drones equipped with ROUNDS follow QR codes, but other visual cues work, too.

What sets ROUNDS apart from the image recognition technologies in self-driving cars is that the features it recognizes are deliberately placed in the environment to support autonomous navigation.

“The way autonomous vehicles operate is that you train them to look for certain objects,” said Dr. Ahmad Al Rashdan, a senior research and development scientist at INL. He developed the ROUNDS technology for the U.S. Department of Energy. “You train the autonomous navigation system to look for a stop sign, for instance. Everything gets classified; it has to figure out what it is looking at and make a decision.”

By simplifying that process to a search for a single feature designed to stand out from the drone’s surroundings, such as a QR code, Al Rashdan and his colleagues have created a navigation system that lets the drone determine its location in the environment. Using each QR code as a landmark, the drone can perform a job and then move quickly to the next QR code.

“We know the route that the drone has to take,” he said. “And we are inserting known images at known locations in the environment that are easy to identify. There are multiple benefits. For instance, we are just looking for the QR code in this case. We don’t have to train for other objects that might be seen. Because it is only one distinctive object, we can recognize it and operate much faster. The rate of missed QR codes is going to be much lower.”
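Because the route and the code locations are known in advance, the navigation problem reduces to looking for one expected marker at a time. The article does not describe how ROUNDS stores its route, so the following is only a minimal sketch under that assumption: the `Marker` and `Route` classes, their fields, and the facility coordinate frame are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Marker:
    """A visual cue placed at a surveyed location (hypothetical structure)."""
    marker_id: str
    x: float  # metres, in an assumed facility coordinate frame
    y: float
    z: float


class Route:
    """Ordered sequence of markers the drone must visit (illustrative only)."""

    def __init__(self, markers):
        self._markers = list(markers)
        self._index = 0

    @property
    def finished(self) -> bool:
        return self._index >= len(self._markers)

    def expected(self) -> Optional[Marker]:
        """Return the next marker the drone should look for, or None when done."""
        return None if self.finished else self._markers[self._index]

    def observe(self, decoded_id: str) -> bool:
        """Advance the route only if the decoded code matches the expected marker."""
        target = self.expected()
        if target is not None and decoded_id == target.marker_id:
            self._index += 1
            return True
        return False
```

For example, with `Route([Marker("A", 0.0, 0.0, 1.5), Marker("B", 4.0, 0.0, 1.5)])`, seeing code `"B"` first is ignored, and only after `"A"` is observed does the route expect `"B"`. Checking a decoded code against a single expected ID mirrors the point in the quote: the system never has to classify arbitrary objects.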

Accurately Locating the Drone in Its Environment

ROUNDS uses artificial intelligence and machine learning to accurately locate the drone within its environment by analyzing the relative shape of the QR codes. Like the human eye, the drone’s camera perceives changes in the form of an object as it is viewed from different angles.

ROUNDS measures the angles and dimensions of the QR code and infers the drone’s position relative to it, placing the drone within inches of its true position in the environment. On a normal computer, the software processes the QR code and determines the location in less than a hundred milliseconds. Each time ROUNDS calculates the drone’s relative location, high-performance controllers of the kind typically used in rocket and satellite applications update the drone’s trajectory.
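To see why a code of known size reveals distance, consider the standard pinhole camera model. A square of side `side_m` metres at depth Z appears roughly `focal_px * side_m / Z` pixels wide, so depth follows from the apparent size. The sketch below assumes the code faces the camera squarely and that the camera’s focal length and image centre are known. It is not ROUNDS’ published method, which the article does not detail. Recovering full orientation from the code’s skewed outline is typically done with a perspective-n-point solver, such as OpenCV’s `solvePnP`. The function name and parameters are illustrative.

```python
import math


def relative_position(corners, side_m, focal_px, cx, cy):
    """Estimate the marker centre's position in the camera frame (metres).

    Simplified pinhole model assuming the marker faces the camera squarely:
    apparent side length s (pixels) = focal_px * side_m / Z.
    corners: four (u, v) pixel coordinates in order around the square.
    (cx, cy): principal point (image centre) in pixels.
    """
    if len(corners) != 4:
        raise ValueError("expected four corners")
    # Average the four edge lengths to reduce the effect of pixel noise.
    sides = [math.dist(corners[i], corners[(i + 1) % 4]) for i in range(4)]
    s = sum(sides) / 4
    z = focal_px * side_m / s            # depth along the optical axis
    u = sum(p[0] for p in corners) / 4   # marker centre in pixels
    v = sum(p[1] for p in corners) / 4
    x = (u - cx) * z / focal_px          # offset right of the optical axis
    y = (v - cy) * z / focal_px          # offset below the optical axis
    return x, y, z
```

With a 0.2 m code imaged as an 80-pixel square centred at (720, 360) by a camera with an 800-pixel focal length and image centre (640, 360), the code is about 2.0 m away and 0.2 m to the right of the optical axis.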

When the next QR code becomes visible, ROUNDS identifies it and again establishes the drone’s location, feeding that information to the controller, which continuously adjusts the drone’s trajectory. This process repeats until the drone has completed its mission.
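The article does not say which control law ROUNDS uses, beyond describing its controllers as rocket- and satellite-grade. The loop below is therefore only a sketch of the general pattern. Each new position fix produces a fresh velocity command toward the next waypoint. A simple saturated proportional controller stands in for the real one. `velocity_command`, `simulate`, the gain, the speed limit, and the 0.1 s update interval are all assumptions.

```python
import math


def velocity_command(position, target, gain=0.8, max_speed=1.0):
    """Proportional velocity command toward the target waypoint.

    A deliberately simple stand-in for the trajectory controller: velocity is
    proportional to the position error and clipped to max_speed (m/s).
    """
    error = [t - p for p, t in zip(position, target)]
    command = [gain * e for e in error]
    speed = math.sqrt(sum(c * c for c in command))
    if speed > max_speed:
        command = [c * max_speed / speed for c in command]
    return tuple(command)


def simulate(start, target, dt=0.1, steps=100):
    """Integrate the commanded velocity, re-planning at every position fix."""
    pos = list(start)
    for _ in range(steps):
        v = velocity_command(pos, target)
        pos = [p + vi * dt for p, vi in zip(pos, v)]
    return tuple(pos)
```

Far from the waypoint, the command is capped at `max_speed`. Close to it, the command shrinks in proportion to the remaining error, so the simulated drone settles onto the waypoint instead of overshooting.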

“The addition of predetermined visual cues enables the drone to find them quickly,” Al Rashdan said. “The QR codes also establish absolute location references since we already know where the visual cues are inserted.”
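Because each code’s surveyed position is known, a relative measurement can be converted into an absolute position. The drone is located at the marker’s position minus the measured offset, once that offset is rotated into the facility frame. The 2-D sketch below assumes the drone’s heading is already known, for example from the code’s observed orientation. The function and argument names are illustrative and not taken from ROUNDS.

```python
import math


def drone_world_position(marker_xy, rel_xy, yaw_rad):
    """Convert a marker observation into an absolute drone position (2-D).

    marker_xy: surveyed marker position in the facility frame (metres).
    rel_xy:    marker position measured in the drone's body frame
               (x forward, y left), e.g. from a camera-based estimate.
    yaw_rad:   drone heading in the facility frame (assumed known here).
    """
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    # Rotate the body-frame offset into the facility frame.
    dx = c * rel_xy[0] - s * rel_xy[1]
    dy = s * rel_xy[0] + c * rel_xy[1]
    # The drone sits at the marker position minus that offset.
    return marker_xy[0] - dx, marker_xy[1] - dy
```

Suppose a code is surveyed at (10, 5) and seen 2 m straight ahead by a drone heading along the facility’s +y axis. The drone must then be at (10, 3). This is the sense in which the codes provide absolute rather than merely relative references.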

Advantages of Navigating with Images

That simplicity gives ROUNDS advantages in cost, accuracy, and speed.

Together, the image analysis and control steps take less than a hundred milliseconds using normal computer power. “This allows the drones to navigate quickly, covering much more distance and reaching far more locations before it returns to the charging pad,” Al Rashdan said.

ROUNDS holds another significant benefit: It’s easy to modify off-the-shelf drones to use the technology. Retrofitting a standard commercial drone for environment mapping with lidar, or for Wi-Fi triangulation, typically requires expensive equipment and expertise. ROUNDS requires no changes to the drone’s hardware or software configuration, because the navigation information can be embedded in the QR codes themselves. The ROUNDS software is simply installed on a standard computer that communicates wirelessly with the drone.
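A QR code can carry a short text payload. That payload could include the code’s own identifier, its surveyed position, and the identifier of the next code on the route. The article does not specify what ROUNDS actually encodes. The `key=value;...` format, the `Waypoint` class, and `parse_payload` below are therefore purely hypothetical. They assume that a separate QR reader has already decoded the image to a string.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Waypoint:
    marker_id: str
    position: tuple          # (x, y, z) in metres, assumed facility frame
    next_id: Optional[str]   # None marks the end of the route


def parse_payload(text: str) -> Waypoint:
    """Parse a hypothetical 'key=value;...' payload decoded from a QR code."""
    fields = {}
    for part in text.split(";"):
        if not part:
            continue  # tolerate a trailing separator
        key, sep, value = part.partition("=")
        if not sep:
            raise ValueError(f"malformed field: {part!r}")
        fields[key.strip()] = value.strip()
    try:
        position = (float(fields["x"]), float(fields["y"]), float(fields["z"]))
        return Waypoint(fields["id"], position, fields.get("next") or None)
    except KeyError as missing:
        raise ValueError(f"payload missing field {missing}") from None
```

A payload such as `id=G7;x=12.5;y=3.0;z=1.8;next=G8` would tell the drone where it is and which code to look for next, without any route data stored on the drone itself.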

“People said, ‘This is too simple to work.’ But the simplest solution is almost always the best solution,” Al Rashdan said. “Still, this simple solution would not be possible without powerful image processing, machine learning, and control technologies.”

Cory Hatch is a science writer at the Idaho National Laboratory.