How a Jenga-playing Robot Will Affect Manufacturing

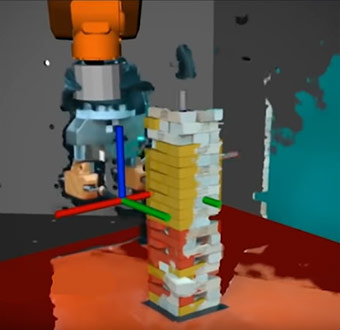

The Jenga-playing robot is a successful example of AI moving into the physical world. Image: MIT

Humans are born with intuitive abilities to manipulate physical objects, honing and perfecting their skills from an early age through play and practice. But it's not as easy for robots.

Computers may have beaten the best human players of abstract, cognitive-based games such as chess and Go, but the physical abilities and intuitive perceptions acquired over millions of years of evolution still give humans a definite edge in tactile perception and the manipulation of real-world objects.

That human-machine skill gap makes it difficult to develop machine learning, or ML, algorithms for physical tasks that require not just visual information but also tactile data. A team of researchers in the Department of Mechanical Engineering of the Massachusetts Institute of Technology has taken a fresh approach to that problem by teaching a robot to play Jenga. Their work was published in Science Robotics.

Jenga involves building a layered tower by carefully moving rectangular blocks from lower layers to the top, without collapsing the tower. That requires careful consideration of which blocks to move and which to leave in place, a choice that's not always apparent.

“In the game of Jenga, a lot of the information that is necessary to drive the motion of the robot isn't apparent to the eyes, in visual information,” noted the study’s co-author Alberto Rodriguez. “You cannot point a camera at the tower and tell which blocks are free to move or which blocks are blocked. The robot has to go and touch them.”

The researchers addressed that challenge by giving the robot a way to integrate both visual and tactile cues.

The experimental setup consisted of an off-the-shelf ABB IRB 120 robotic arm fitted with an ATI Gamma force and torque sensor at the wrist and an Intel RealSense D415 camera, along with a custom-built gripper.

“Our system ‘feels’ the pieces as it pushes against them,” said Nima Fazeli, an MIT graduate student and the paper's lead author. “It integrates this information with its visual sensing to produce hypotheses as to the type of block interaction, block configurations, and then decides what actions to take.”

Most modern ML algorithms begin by defining a problem as "the world is like this, what should be my next move?" Rodriguez said. A typical strategy would be a “bottom-up” approach: simulate every possible outcome of how the robot might interact with every Jenga block and the tower. But this would be both time-consuming and data-intensive, requiring enormous computational power.

Playing Jenga is a real-world physical task in which every possible move can have various outcomes. “It's very difficult to come up with a simulator version that can be used to train a machine-learning algorithm,” Rodriguez said. Training is further complicated by the fact that every time the robot makes a wrong move and the tower collapses, a human being has to rebuild the structure before the robot can try again.

Instead, MIT engineers decided on a "top-down" learning methodology that would more closely simulate the human learning process. “The intent is to build systems that are able to make useful abstractions (top-down) that they can use to learn manipulation skills quickly,” Fazeli said. “In this approach, the robot learns about the physics and mechanics of the interaction between the robot and the tower.”

Rather than going through thousands of possibilities, Fazeli and his colleagues trained the robot on about 300 attempts, then grouped similar outcomes and measurements into "clusters" that the ML algorithm could then use to model and predict future moves.
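To make the idea of grouping attempts into clusters concrete, here is a minimal sketch in Python. It is not the MIT team's method: it uses plain k-means as a simple stand-in, and the data are invented for illustration. Each push is assumed to be summarized by two numbers, the force the block resists with and the distance it travels, and the names `pushes`, `kmeans`, and `predict` are hypothetical.

```python
import random
from math import dist

# Synthetic stand-in data: the numbers below are invented for illustration.
# Each push is recorded as (resisting force in newtons, block travel in mm).
rng = random.Random(42)
loose = [(rng.gauss(0.5, 0.15), rng.gauss(10.0, 1.0)) for _ in range(150)]
jammed = [(rng.gauss(4.0, 0.5), rng.gauss(1.0, 0.4)) for _ in range(150)]
pushes = loose + jammed  # about 300 attempts, echoing the article
rng.shuffle(pushes)


def nearest(point, centroids):
    """Return the index of the centroid closest to point."""
    return min(range(len(centroids)), key=lambda i: dist(point, centroids[i]))


def kmeans(points, k, iterations=20):
    """Plain k-means with a deterministic farthest-point initialisation."""
    centroids = [points[0]]
    while len(centroids) < k:
        # Next seed: the point farthest from all seeds chosen so far.
        centroids.append(max(points, key=lambda p: min(dist(p, c) for c in centroids)))
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for p in points:
            groups[nearest(p, centroids)].append(p)
        # Move each centroid to the mean of its group (keep it if the group is empty).
        centroids = [
            tuple(sum(axis) / len(g) for axis in zip(*g)) if g else centroids[i]
            for i, g in enumerate(groups)
        ]
    return centroids


centroids = sorted(kmeans(pushes, k=2))  # sorted by force, lowest first
names = ["free", "stuck"]  # labels added afterwards, for readability only


def predict(push):
    """Name the cluster that a new (force, travel) measurement falls into."""
    return names[nearest(push, centroids)]


print(centroids)
print(predict((0.4, 9.0)), predict((4.2, 0.8)))
```

The algorithm is never told which pushes hit loose blocks and which hit jammed ones; the two groups emerge from the measurements, and the "free" and "stuck" names are attached only afterward.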

“[The AI] builds useful abstractions without being told what those abstractions are, i.e., it learns that different types of blocks that are stuck or free exist,” Fazeli said. “It uses this information to plan and control its interactions. This approach is powerful because we can change the goal of the robot and it can keep using the same model. For example, we can ask that it identify all blocks that don't move, and it can just do that without needing to retrain. It is also data-efficient thanks to its representation that allows for abstractions.”
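Fazeli's point about changing the goal without retraining can be illustrated with a small Python sketch. Everything in it is assumed for the sake of the example: the two cluster centers in `MODEL`, the block names, and the probe readings are invented, and the nearest-center rule is a stand-in rather than the model described in the paper.

```python
from math import dist

# Hypothetical learned model: one centre per cluster, given as
# (resisting force in newtons, block travel in mm). Values are invented.
MODEL = {"free": (0.5, 10.0), "stuck": (4.0, 1.0)}

# Hypothetical tactile readings from gently probing four blocks.
probes = {
    "A3": (0.6, 9.2),
    "B1": (3.8, 1.1),
    "B2": (0.4, 10.5),
    "C2": (4.3, 0.7),
}


def classify(measurement, model=MODEL):
    """Return the label of the cluster centre nearest to the measurement."""
    return min(model, key=lambda label: dist(measurement, model[label]))


def blocks_to_extract(readings):
    """Goal 1: play the game by choosing blocks that move freely."""
    return sorted(b for b, m in readings.items() if classify(m) == "free")


def blocks_that_do_not_move(readings):
    """Goal 2: a different task that reuses the very same model."""
    return sorted(b for b, m in readings.items() if classify(m) == "stuck")


print(blocks_to_extract(probes))
print(blocks_that_do_not_move(probes))
```

Both goals query the same `MODEL`; only the question asked of it changes, which is the sense in which no retraining is needed.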

By using the visual and tactile information collected through its camera and force sensor, the robot is thus able to learn from experience and plan future actions.

While the system won't be challenging human Jenga champs anytime soon, the top-down ML approach demonstrated in this work may have a more significant impact beyond the game.

“We're looking toward industrial automation where we hope to have flexible robotic systems that can quickly acquire novel manipulation skills and behave reactively to their mistakes,” Fazeli said. “Modern day assembly lines change rapidly to align with consumer interests so we need systems that can keep up.”

Mark Wolverton is an independent writer.
